Andrej Karpathy Recommends Books (x.com)

This thread is the reason I read the 1000+ page Atlas Shrugged by Ayn Rand. Great recommendations, somewhat hard to find in a random twitter thread.

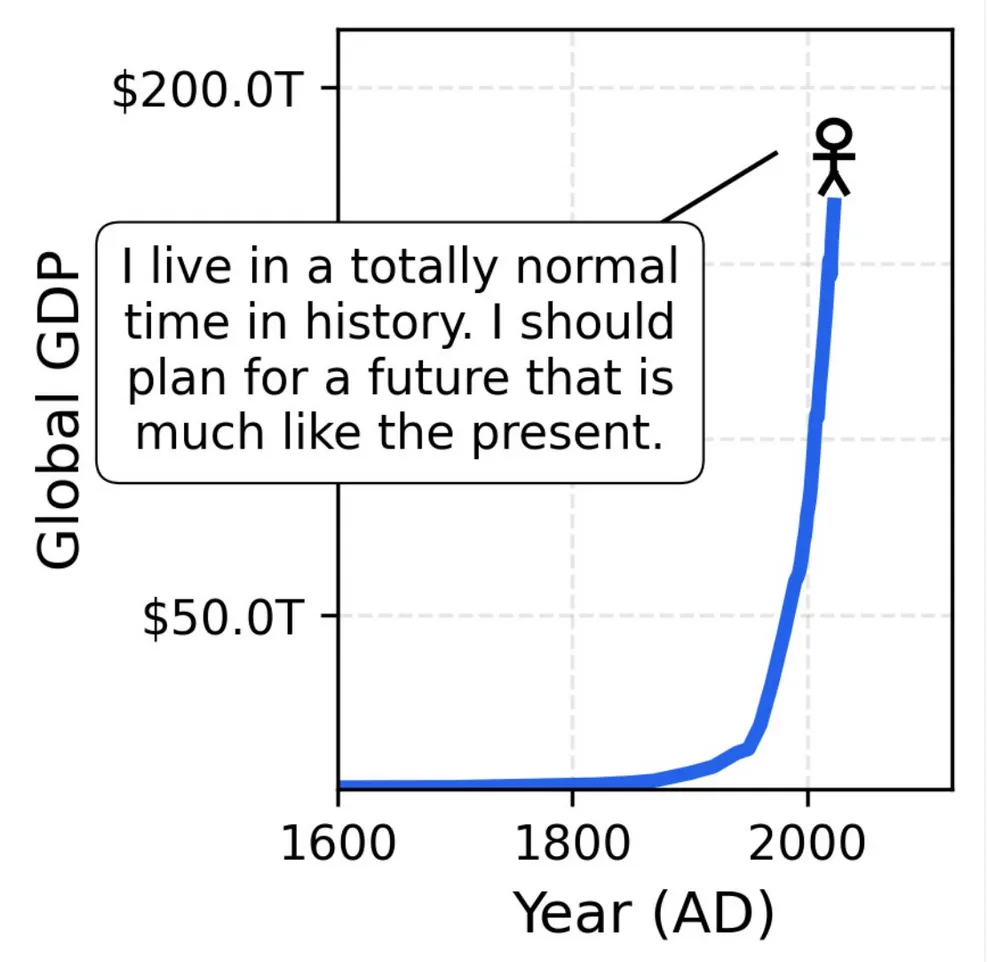

I simply can’t get over this image. I saw it first in this thread by Jascha Sohl-Dickstein and already mentioned it in my post about bubbles. But even months later I have to think of this: in what unprecedented times we live, and yet how we take so many things for granted.

When committing to a platform, think about how much ownership and access you retain over your data. Are you locked in? Can you get locked out? Can you process your data in any way you like?

Slack workspace is quite popular, but they implemented ridiculous API rate limits for “non-approved” apps. The limit is one request per minute (lmao), affecting (e.g., reading) at most 15 messages per minute. And this is for a strictly paid product, where each seat is billed. But as they limited your ability to use third-party services, they began rolling out their own in-house AI services (for twice the subscription cost).

I like retaining control over my data. For notes, this is quite easy. Instead of using something like Notion (where you can’t retrieve any files if you are logged out) or Apple Notes (where your account of 20 years can get locked over redeeming a gift card), you can take your notes in Obsidian. With Obsidian, everything is stored in plain-text markdown files. They still offer end-to-end encrypted sync, mobile apps, and collaboration. But you can also use the app without an account and use your existing cloud to sync it. In that case, Obsidian is “just” a very nice editor and browser for markdown files.

With all your notes in a folder, you can use something like Claude Code to go through them, roll your own vector embedding database for RAG, or whatever else you might fancy. It’s your data; do whatever you want.
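Because the notes are plain files, even a few lines of standard-library Python can search them. The following is a minimal sketch, not a real RAG setup: word counts serve as a crude stand-in for embeddings, and `search_notes` as well as the vault path are hypothetical names chosen for illustration.

```python
import math
import re
from collections import Counter
from pathlib import Path


def tokenize(text: str) -> Counter:
    """Lowercased word counts; a crude stand-in for a real embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search_notes(vault: Path, query: str, top_k: int = 3) -> list[tuple[float, Path]]:
    """Rank all markdown files below `vault` by similarity to `query`."""
    q = tokenize(query)
    scored = [
        (cosine(q, tokenize(path.read_text(encoding="utf-8"))), path)
        for path in vault.rglob("*.md")
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]
```

Swapping `tokenize` for calls to an actual embedding model would turn this into the retrieval half of a small RAG pipeline, with the notes folder staying the single source of truth.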

For chat it’s a bit trickier. I think the best you could do is self-host an instance of Mattermost or Element, which will involve more significant drawbacks though.

With uv it’s straightforward to try different Python flavours, e.g., the free-threaded build introduced in 3.13 or the JIT-compiled PyPy, which is available for versions up to 3.11. Just run

uv python install 3.14t for the free-threaded version or uv python install pypy3.11 for the latest version of pypy.
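To check which flavour a given interpreter actually is, a small script like the one below can be run under each of them. This is a sketch, assuming CPython 3.13+ for the `sys._is_gil_enabled()` check (older versions and PyPy do not have it, so the code falls back to reporting the GIL as enabled); `describe_interpreter` is a name made up for this example.

```python
import platform
import sys
import sysconfig


def describe_interpreter() -> dict:
    """Summarise implementation, version, and free-threading status."""
    # sys._is_gil_enabled() only exists on CPython 3.13+.
    gil_check = getattr(sys, "_is_gil_enabled", None)
    return {
        "implementation": platform.python_implementation(),  # e.g. CPython or PyPy
        "version": platform.python_version(),
        # Py_GIL_DISABLED is set to 1 on free-threaded builds, else 0 or None.
        "free_threaded_build": bool(sysconfig.get_config_var("Py_GIL_DISABLED")),
        "gil_enabled": bool(gil_check()) if gil_check else True,
    }


if __name__ == "__main__":
    for key, value in describe_interpreter().items():
        print(f"{key}: {value}")
```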

Since Astral has now officially announced the beta of ty, I’d like to share my current setup of amazing and fast tools:

pixi add --pypi x). All of these are built in Rust and just generally nice to use.

Honorable mention to loguru for being a logger that I actually can remember how to use (from loguru import logger; logger.info('hello')).

Claude Code is now telling users that they have free one-week passes to hand out. Great marketing and nice ASCII art

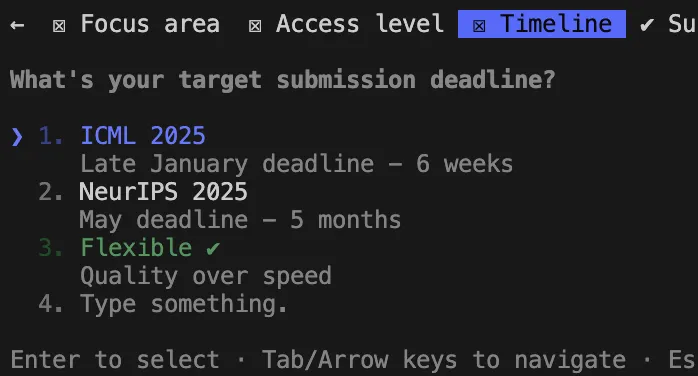

LLMs are not human. But they imitate human behaviour in many ways. You can threaten an LLM, you can argue with them, and you can offer a tip for a great answer, all of which will impact what sort of result you get. Recently my Claude Code has been obsessed with timelines. I don’t know why, because all those three-phase, eight-week time schedules are implemented in 15 minutes anyway, but it keeps coming up with them.

Today Claude asked me when I want to submit my work (because I said I want paper-ready figures). Naturally I would never admit to being short on time to an LLM. Just say it has all the time in the world to make them look perfect. This whole dance is becoming a bit bizarre, but keep in mind that an LLM will imitate human patterns, so don’t make your LLM produce sloppy and rushed-looking work by telling it you have little time.

It doesn’t take any real time to give your LLM infinite time.

Gradient checkpointing is a technique that trades speed for reduced VRAM usage during backprop. Normally, we keep the forward activations of all layers whose gradients are still to be computed in VRAM, since the later steps of backpropagation need them. We can reduce VRAM usage by discarding these earlier activations and recomputing them when we require them. A middle ground between recomputing everything and keeping everything in VRAM is keeping only certain checkpoints in VRAM. The linked repo has a great animation showing the whole process. PyTorch has this implemented as activation checkpointing (which is a more reasonable name). In their blog they also mention that they offer an automatic Pareto-optimal tradeoff for a user-specified memory limit! (although the config seems to have a different name in the code than mentioned in the blog)
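The idea can be sketched in plain Python on a toy chain of scalar layers `y = tanh(w * x)`. This is an illustration under simplifying assumptions, not how PyTorch implements it: the function names and the fixed `segment` length are made up for this example, and "memory" is simply the number of stored layer inputs.

```python
import math


def layer(w: float, x: float) -> float:
    return math.tanh(w * x)


def layer_grads(w: float, x: float, grad_out: float) -> tuple[float, float]:
    """Return (dL/dx, dL/dw) for y = tanh(w * x)."""
    y = math.tanh(w * x)
    local = (1.0 - y * y) * grad_out
    return local * w, local * x


def backprop_full(weights: list[float], x0: float) -> tuple[list[float], int]:
    """Baseline: keep the input of every layer for the backward pass."""
    inputs, x = [], x0
    for w in weights:
        inputs.append(x)
        x = layer(w, x)
    grad_x, grad_w = 1.0, [0.0] * len(weights)  # loss = final output
    for i in reversed(range(len(weights))):
        grad_x, grad_w[i] = layer_grads(weights[i], inputs[i], grad_x)
    return grad_w, len(inputs)


def backprop_checkpointed(weights: list[float], x0: float, segment: int) -> tuple[list[float], int]:
    """Keep only every `segment`-th layer input and recompute the rest."""
    checkpoints, x = {}, x0
    for i, w in enumerate(weights):
        if i % segment == 0:
            checkpoints[i] = x
        x = layer(w, x)
    grad_x, grad_w = 1.0, [0.0] * len(weights)
    peak = len(checkpoints)
    for start in reversed(range(0, len(weights), segment)):
        end = min(start + segment, len(weights))
        # Recompute this segment's layer inputs from its checkpoint.
        inputs = [checkpoints[start]]
        for i in range(start, end - 1):
            inputs.append(layer(weights[i], inputs[-1]))
        # inputs[0] is the checkpoint itself, so do not count it twice.
        peak = max(peak, len(checkpoints) + len(inputs) - 1)
        for i in reversed(range(start, end)):
            grad_x, grad_w[i] = layer_grads(weights[i], inputs[i - start], grad_x)
    return grad_w, peak
```

Both functions return the same gradients; the checkpointed variant stores fewer activations at its peak and pays for that with a second partial forward pass per segment.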

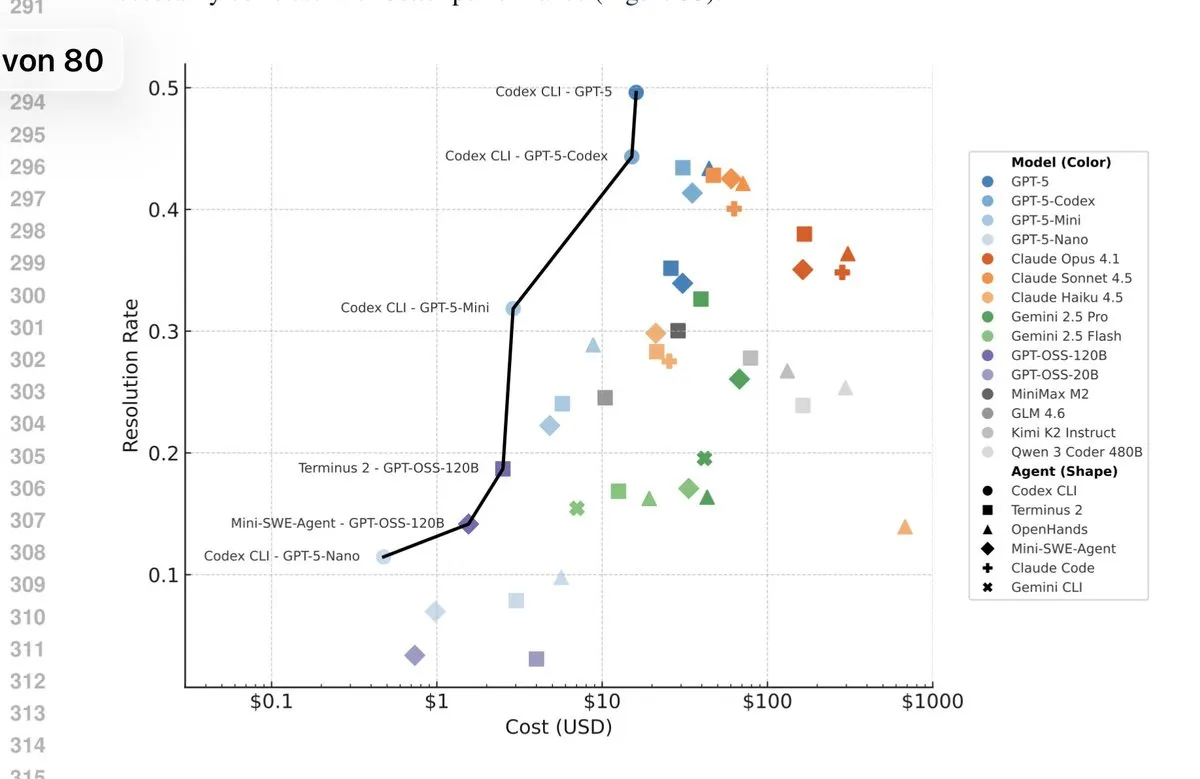

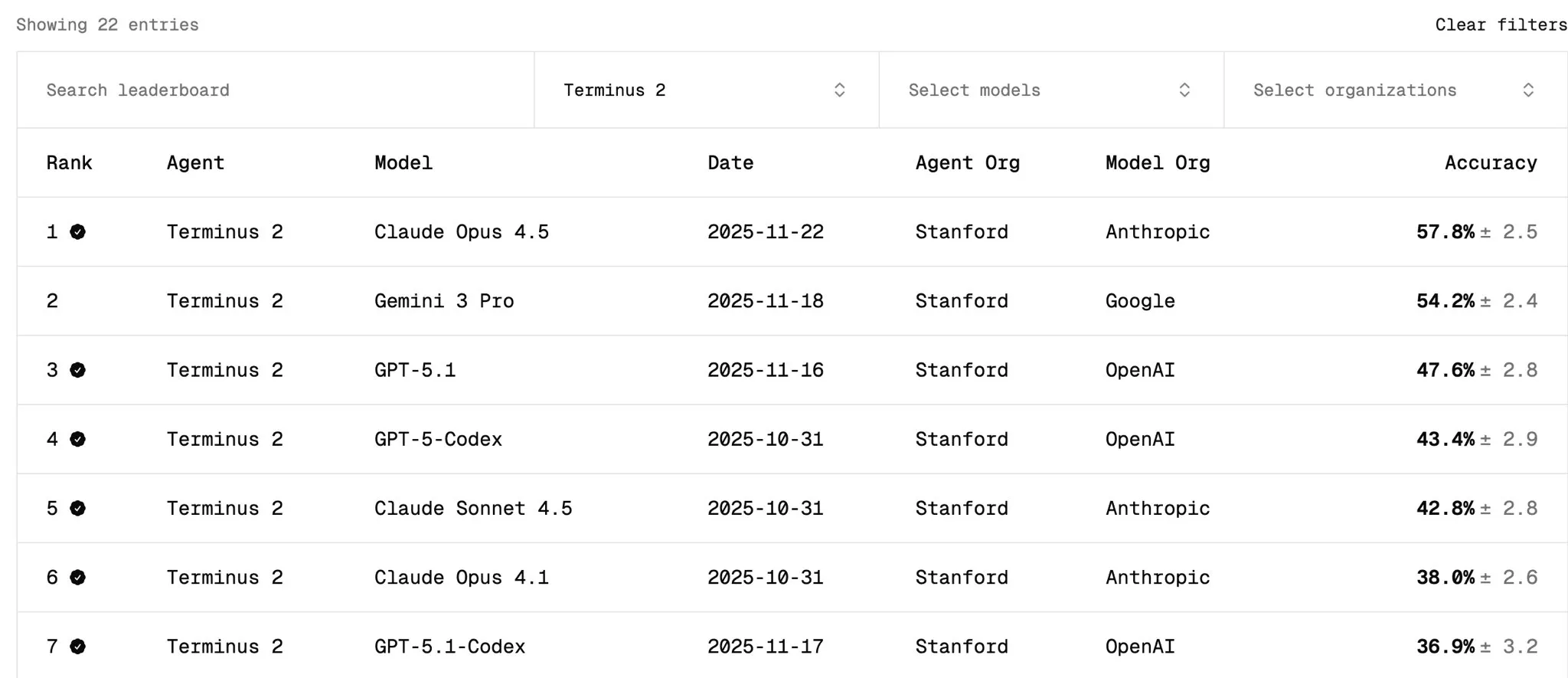

The latest version of the TerminalBench submission to ICLR has a very GPT-pilled Pareto frontier.

TerminalBench not only measures model performance, but also the agent used. If we compare everything in the Terminus-2 agent on the tbench.ai homepage, we see that Gemini 3 Pro should outperform GPT-5 in terms of raw model performance (Opus 4.5 is not part of the submission yet).

I have two thoughts on this:

Pickle is (/was?) a widespread file format in the Python ecosystem. It is immensely flexible, as you can pickle a lot of things (but not everything, as I learned using submitit). But that flexibility comes at the cost of security, as pickle files can contain arbitrary code instructions. Hugging Face has a great post (the link of this note) covering this and their scanner for potentially dangerous pickle files. They also have a file format called safetensors (because PyTorch checkpoints are pickle-based by default and can therefore also contain code…).
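A harmless demonstration of the problem, using only the standard library: an object can tell the unpickler which function to call at load time via `__reduce__`. The `Payload` class and the `referenced_globals` helper are made up for this sketch; the helper is a toy and not Hugging Face's scanner. It only looks at the `GLOBAL` opcode, which is why the example pins `protocol=3`.

```python
import pickle
import pickletools


class Payload:
    """Object whose pickle asks the loader to call a function of its choosing."""

    def __reduce__(self):
        # Harmless stand-in for attacker code: evaluate "1 + 1" at load time.
        return (eval, ("1 + 1",))


def referenced_globals(data: bytes) -> list[str]:
    """List the "module name" pairs a pickle imports, without executing it.

    Toy version: only handles the GLOBAL opcode (protocols up to 3). Newer
    protocols use STACK_GLOBAL, which a real scanner would have to cover too.
    """
    return [arg for op, arg, _pos in pickletools.genops(data) if op.name == "GLOBAL"]


data = pickle.dumps(Payload(), protocol=3)

# Static inspection: shows that the file wants to import builtins.eval.
print(referenced_globals(data))

# Loading runs eval("1 + 1") and returns its result instead of a Payload.
print(pickle.loads(data))
```

Replace the string passed to `eval` with anything else and it runs with the privileges of whoever calls `pickle.loads`, which is the reason formats that store only raw tensor data are the safer default.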

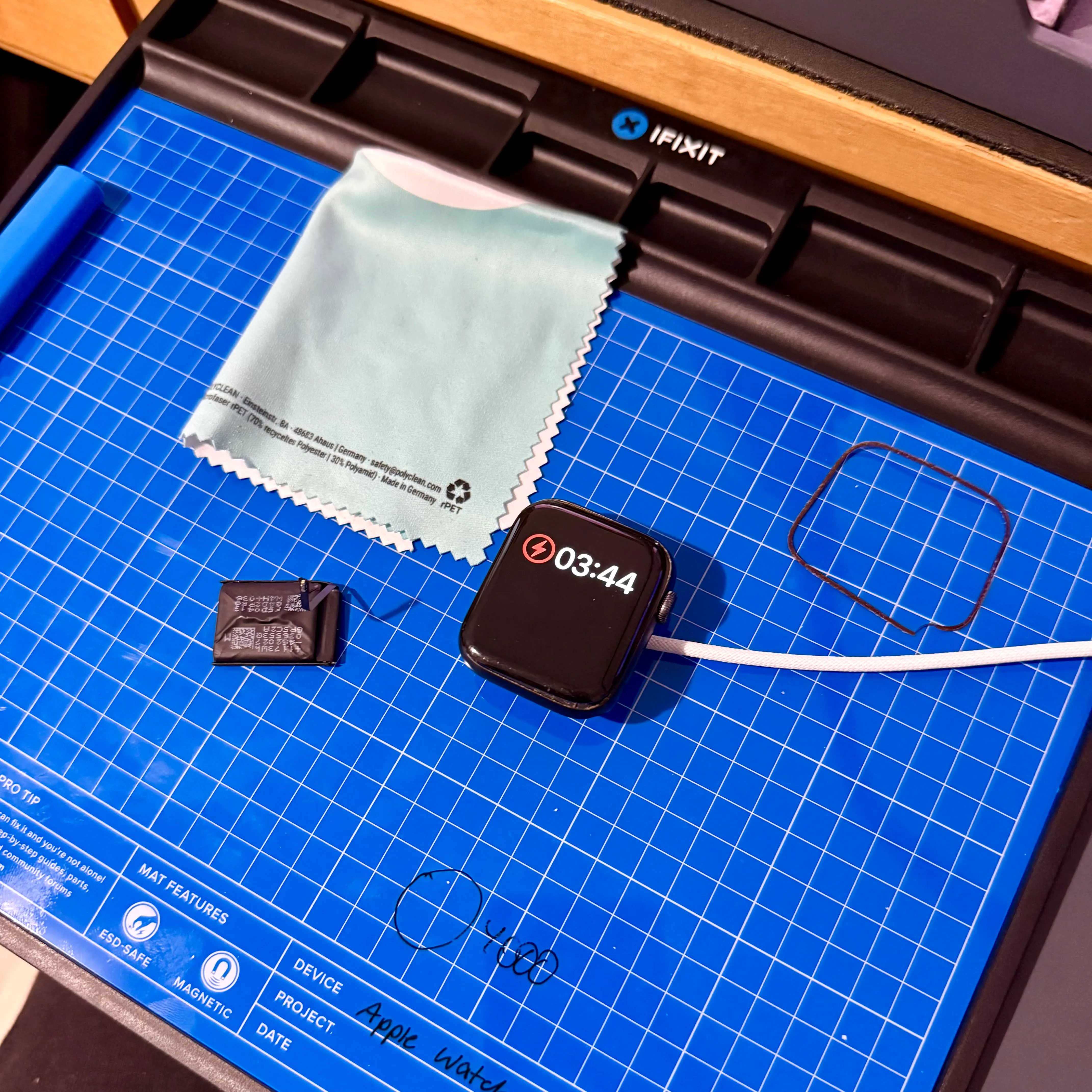

Since I like repairing electronics I’m happy to have learned that iFixit now has an app that makes it even easier for people to get into it. It explains all the necessary basics and even comes with a multi-modal AI chatbot: You can share an image of your problem and it will help you diagnose and remedy the problem, all based on the extensive information that iFixit Guides have for countless devices.

Claude Code now has asynchronous subagents, meaning the main agent can spawn subagents (this is not new) that keep running in the background (this is new). I don’t know if Anthropic has imposed a limit on this feature (they probably don’t have to, since I’ll burn through my usage much faster…), but for me it definitely has replaced some API use cases. I managed to have it spawn over 100 subagents to process a bunch of documents. Not sure if that is what they intended it for, but it’s nice!

I love repairing electronics. Something about these elegant devices coming apart at the correct use of the proper tools, looking inside them, and the pride of looking at something you have assembled yourself…

To replace the battery of this Apple Watch I used an ATTEN ST-862D SMD reworking station (basically a very fancy hot air gun intended for (de-)soldering surface mount components), an Excel No. 10 curved blade, the iFixit Mako Precision Bits, their magnetic project mat, and their assortment of prying and opening tools. Putting a razor blade with force to a glass screen and prying it open is scary, and you should definitely wear eye protection in case glass shards go flying everywhere, but after turning up the heat to 150°C, it opened easily.

It’s probably not economically viable to do this yourself, or to replace the battery at all. This watch is about five years old and has little value. Apple would charge 100 € to replace the battery, more than the whole watch costs. But the battery itself costs just 20 €, and with the right tools, it took me about 20 minutes. And it seems dumb to throw away perfectly fine electronics over a weakening battery.

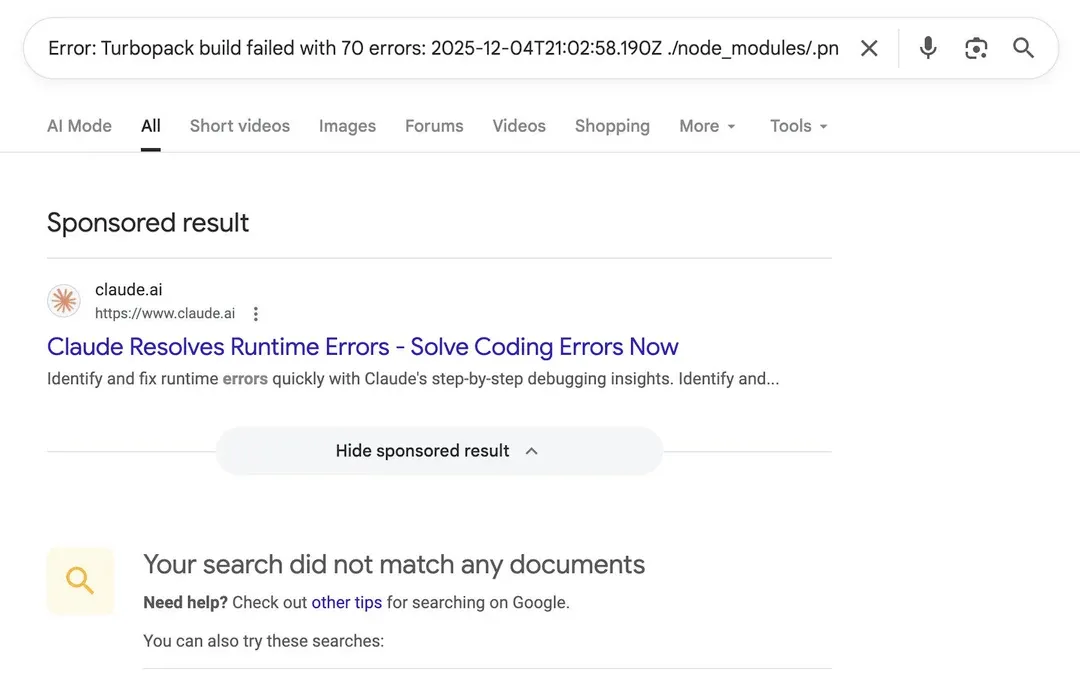

Intent-based advertising means capturing and converting a user based on something they intend to do or to acquire. It doesn’t work for every product, because often potential users are not aware of the problem and don’t go out looking and intending to do something about it. Anthropic uses an interesting intent to advertise Claude (per twitter), namely that they bid on searches for stack traces.

So if someone searches for a stack trace with no results, they are served a Claude ad (which is, admittedly, very good at solving those!). It’s a genius way of indirect intent-based ads. Those ads are probably very cheap as well (for now), because the price is determined by your competition on those keywords (it’s a bidding process, albeit with one entity simultaneously owning the marketplace and supply).

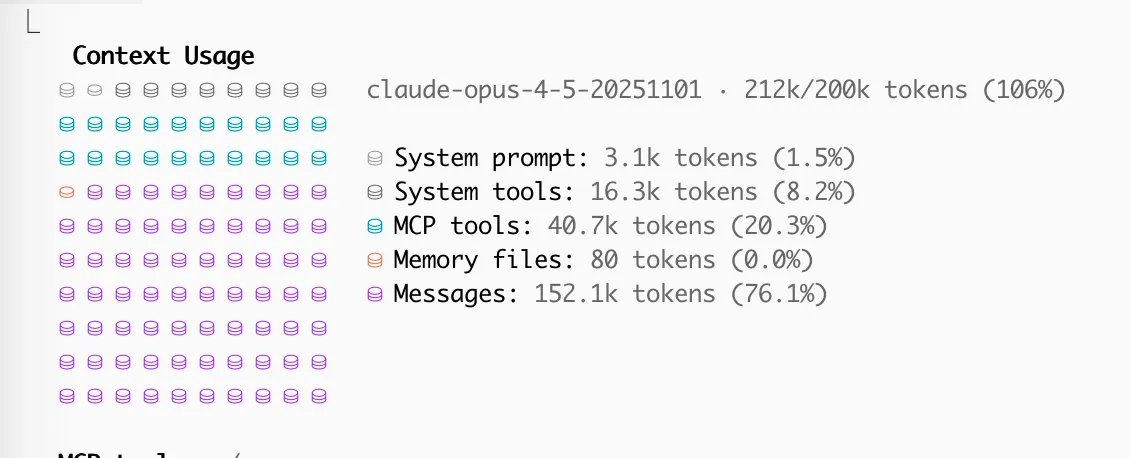

Claude Code has an auto-compact feature. If you run into the last 20% or so of the context window limit, upon finishing the generation it will automatically compact all previous messages. This is mostly fine, but it can happen at very awkward spots and then degrades performance, which is why I turned it off. You can instead/additionally manually compact with optional instructions, like /compact keep parts relevant to the DB migration.

I don’t know if this is intended or not, but disabling auto-compacting also seems to let you go over the context limit? As you can see I got up to 106%. It’s possible that it’s just cutting off the earliest context then, but at least it’s good to know that you can keep going without compacting.

Anthropic’s Societal Impacts team currently runs interviews with Claude.ai users and shared some insights from their initial interviews. The interviewer is Claude. It’s a fun exercise to think about AI in general and maybe shape it a bit.

First, of course, I asked Claude what it had been prompted to do:

Warm-up: What’s the last thing you used an AI chatbot for? (You already answered—implementing code)

Vision question: If you could wave a magic wand and AI could help you with anything in your life, what would you choose? (With follow-ups to understand the deeper value/aspiration behind your answer)

Positive experience: Can you tell me about a time you’ve worked with AI that felt like a step toward that vision?

Concerns: Are there ways that AI might be developed or deployed that would be contrary to your vision or what you value? (With follow-ups to understand why)

Optional: I might go back to something interesting you mentioned earlier to explore it deeper

Wrap-up: Any final thoughts on AI’s role in your life or society?

The goal throughout is to understand your perspective through follow-up questions that dig into the “why” behind your answers—what matters to you and what drives your thinking.

Part of my answer if I could wave a magic wand and AI could help with anything:

I want to drive and ideate, and determine what’s important, but I want AI to design, to implement, to give me things to iterate on and adjust based on my taste and values.

I found myself reaching for a metaphor, thinking of the book Atlas Shrugged:

It is like a man, a train conductor, gripping to the control of a train, controlling thousands of horse power to move hundreds of people; but for the mind.

Someone once told me AI would turn me from a PhD student working in the trenches on one project at a time to a professor orchestrating fleets of AI students. That framing stuck with me:

A lot of AI debate is about what gets lost. […] That metaphor frames it the other way around: All PhD students will become professors! Science will 100x.

But I’m not naively optimistic (I hope?). I listed what would be horrible: AI deciding over humans, mass surveillance, social scoring, and delegating thinking to AI.

I delegate things I understand. […] Delegating thinking would mean having AI come up with some formula or math or function, which you have no intellectual way to grasp. You rely on the AI to be correct. You don’t learn. You don’t think.

There are two ways to tackle a problem with AI:

1. You give the task to AI, it manages to solve it (because AGI) and you have a solution.
2. You look at the task, you don’t understand something, you ask the AI to help you understand.

[…] In the latter, man has grown and become stronger, learned something new and useful. […] In the former, we become weaker, our thinking atrophies.

I also raised fears about surveillance in particular:

I think it increases the stakes. War was always horrible. The atomic bomb, cluster bombs, napalm, chemical weapons upped the stakes. All those human rights abuses were already happening and horrible, and AI ups the stakes.

With Fast Forward Computer Vision (ffcv) you can train a classifier on CIFAR-10 on an H100 in ~14 seconds. They report in their CIFAR-10 example:

92.6% accuracy in 36 seconds on a single NVIDIA A100 GPU.

ffcv achieves that by speeding up the data loading with various techniques, so you can re-use most of your training code and just replace the loading, as this example from the quickstart shows:

```python
from ffcv.loader import Loader, OrderOption
from ffcv.transforms import ToTensor, ToDevice, ToTorchImage, Cutout
from ffcv.fields.decoders import IntDecoder, RandomResizedCropRGBImageDecoder

# Random resized crop
decoder = RandomResizedCropRGBImageDecoder((224, 224))

# Data decoding and augmentation
image_pipeline = [decoder, Cutout(), ToTensor(), ToTorchImage(), ToDevice(0)]
label_pipeline = [IntDecoder(), ToTensor(), ToDevice(0)]

# Pipeline for each data field
pipelines = {
    'image': image_pipeline,
    'label': label_pipeline
}

# Replaces PyTorch data loader (`torch.utils.data.DataLoader`)
# write_path, bs, num_workers and epochs are assumed to be defined elsewhere
loader = Loader(write_path, batch_size=bs, num_workers=num_workers,
                order=OrderOption.RANDOM, pipelines=pipelines)

# rest of training / validation proceeds identically
for epoch in range(epochs):
    ...
```

I somehow missed this, but OpenAI stated on October 22nd that they are no longer legally obliged to retain all outputs. A court order in the legal action brought by the New York Times had previously compelled them to do so.

So Antigravity by Google will let the agent “auto-decide” which commands to execute and which require approval. It also does not use a sandbox. It didn’t take long for the first Reddit post about the agent deleting a whole drive to arrive. Meanwhile, Claude Code is going in the complete opposite direction: rigorous permission systems and a sandbox on top. Anthropic explains this in more detail in their blog, but basically they argue that you need both filesystem and network sandboxing, because bypassing one would also mean bypassing the other (it’s trivial for Linux because everything is a file, but holds more generally).

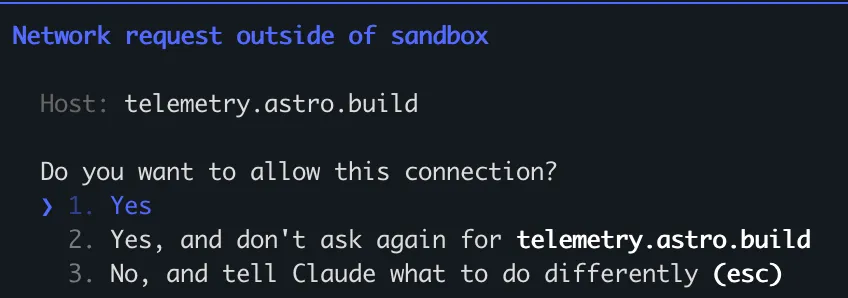

Just running an npm run build will trigger a sandbox request if a telemetry request is being made. git commit needs to use the non-sandbox fallback, because it uses my key for signing the commit, which is not available from within the sandbox. They always offer a sensible “always allow” because they are acutely aware of Approval Fatigue. It’s a good approach and makes me feel a lot safer.

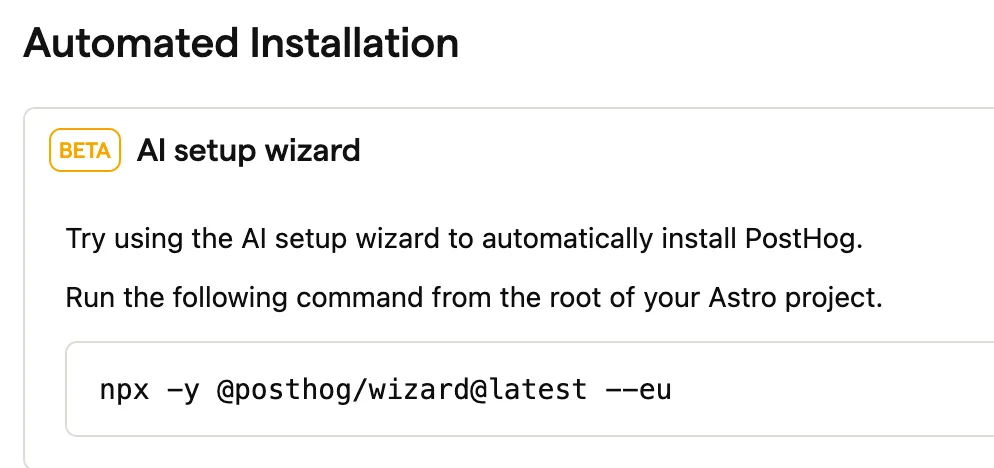

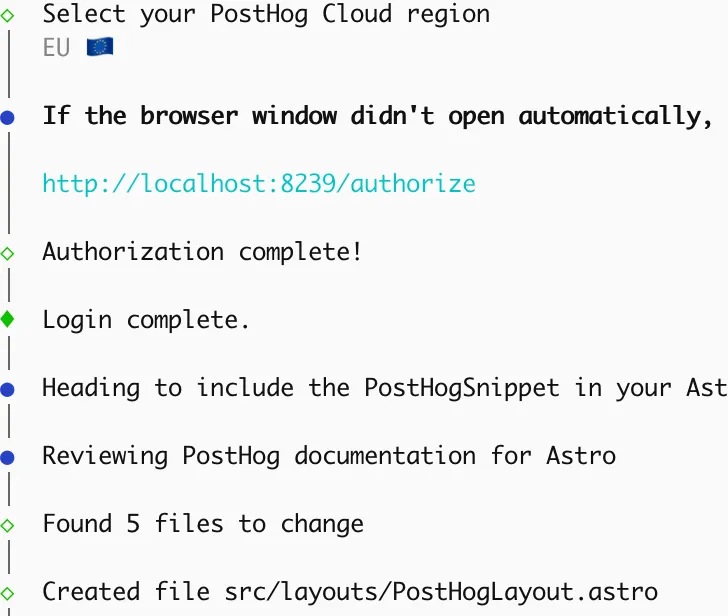

If you want to set up PostHog on your website, you usually have to install a package, add the imports, etc. PostHog has fully integrated an AI agent into their install process, so you invoke an AI agent as the installation step.

An agentic eval that compares agents by how well they can build a Counter-Strike clone.